Apple defends its way of preventing child abuse

With a new FAQ page, Apple is trying to allay fears that its new anti-child abuse measures could be turned into a surveillance tool by authoritarian governments.

"This technology is limited to detecting CSAM stored in iCloud," the company wrote, "and we will not accede to any government's request to expand it."

The company's new tools include two child protection features:

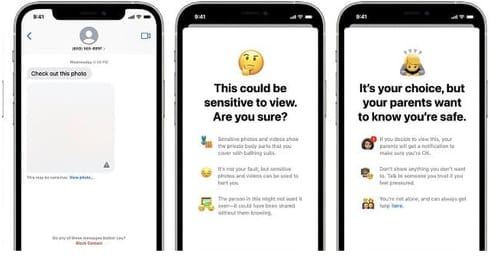

- The first is the communication safety feature, which uses on-device machine learning to identify and blur sexually explicit images in the Messages app. If a child aged 12 or younger chooses to view or send such an image, their parents can be notified.

- The second feature is designed to detect child sexual abuse material (CSAM) by scanning users' photos as they are uploaded to iCloud. If such material is detected, the company is notified, and it alerts the authorities after verifying that the material is present.

The plan quickly met opposition from digital privacy groups and activists, who argue that these features introduce a backdoor into the company's software.

These groups suggest that once such a backdoor exists, it can be expanded to find types of content beyond child sexual abuse material.

Authoritarian governments could use it to search for politically dissenting material. The Electronic Frontier Foundation writes that even a thoroughly documented, carefully thought-out, and narrowly scoped backdoor is still a backdoor.

It added, "One of the technologies originally built to scan and hash child sexual abuse imagery has been repurposed to create a database of 'terrorist' content that companies can contribute to and access for the purpose of banning such content."

However, the iPhone maker says it has adequate safeguards in place to prevent its system from being used to detect anything other than child sexual abuse imagery.


The company said the list of prohibited images is provided by the National Center for Missing and Exploited Children (NCMEC) and other child safety organizations, and that the system works only with the CSAM image hashes those organizations supply.

It also indicated that it does not add anything to this list of image hashes itself. The list is the same on all iPhones and iPads, which prevents the targeting of individual users.

The company said it would refuse any government demand to add non-CSAM images to the list. "We have faced demands to build and deploy government-mandated changes that degrade the privacy of users before, and have steadfastly refused those demands," it said. "We will continue to refuse them in the future."

It should be noted that, despite these assurances, Apple has in the past made concessions to governments in order to continue doing business in their countries.

It sells iPhones without FaceTime in countries that do not allow encrypted phone calls. In China, it has removed thousands of apps from its App Store, and it has also moved user data to the servers of a state-run telecommunications company.

The company also failed to address some concerns about the feature that scans messages for sexually explicit content.

It said that the feature does not share any information with Apple or law enforcement agencies. However, it did not explain how it ensures that the tool focuses only on sexually explicit images.

The Electronic Frontier Foundation writes that all it would take to widen this narrow backdoor is an expansion of the machine learning parameters to look for additional types of content, or a tweak of the configuration flags to scan anyone's accounts, not just children's.